A Unified Alternating Direction Method of Multipliers by Majorization Minimization

Abstract

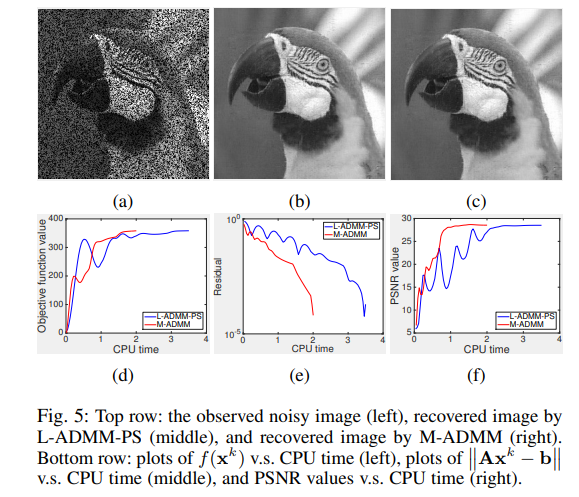

Accompanied with the rising popularity of compressed sensing, the Alternating Direction Method of Multipliers (ADMM) has become the most widely used solver for linearly constrained convex problems with separable objectives. In this work, we observe that many existing ADMMs update the primal variable by minimizing different majorant functions with their convergence proofs given case by case. Inspired by the principle of majorization minimization, we respectively present the unified frameworks of Gauss-Seidel ADMMs and Jacobian ADMMs, which use different historical information for the current updating. Our frameworks generalize previous ADMMs to solve the problems with non-separable objectives. We also show that ADMMs converge faster when the used majorant function is tighter. We then propose the Mixed Gauss-Seidel and Jacobian ADMM (M-ADMM) which alleviates the slow convergence issue of Jacobian ADMMs by absorbing merits of the Gauss-Seidel ADMMs. M-ADMM can be further improved by backtracking and wise variable partition. We also propose to solve the multi-blocks problems by Proximal Gauss-Seidel ADMM which is of the Gauss-Seidel type. It convegences for non-strongly convex objective. Experiments on both synthesized and real-world data demonstrate the superiority of our new ADMMs. Finally, we release a toolbox that implements efficient ADMMs for many problems in compressed sensing.