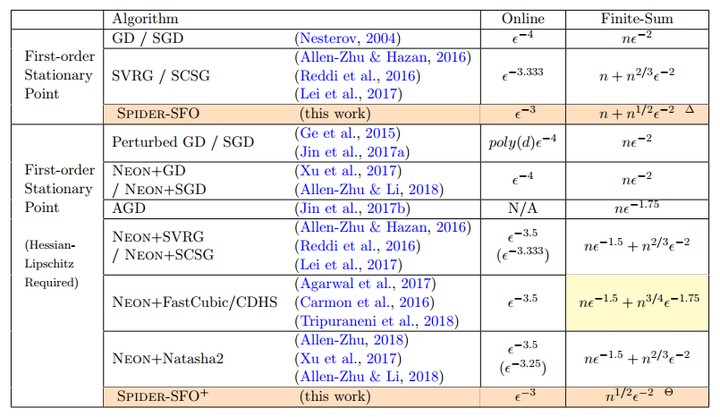

SPIDER Near-Optimal Non-Convex Optimization via Stochastic Path-Integrated Differential Estimator

Abstract

In this paper, we propose a new technique named Stochastic Path-Integrated Differential EstimatoR (Spider), which can be used to track many deterministic quantities of interest with significantly reduced computational cost. We apply Spider to two tasks, namely the stochastic first-order and zeroth-order methods. For stochastic first-order method, combining Spider with normalized gradient descent, we propose two new algorithms, namely Spider-SFO and Spider-SFO+, that solve non-convex stochastic optimization problems using stochastic gradients only. We provide sharp error-bound results on their convergence rates. In special, we prove that the Spider-SFO and Spider-SFO+ algorithms achieve a record-breaking gradient computation cost of O(min(n^{1/2}eps^{-2}, eps^{-3})) for finding an eps-approximate first-order and O(min(n^{1/2}eps^{-2}+eps^{-2.5}, eps^{-3})) for finding an (eps, O(eps^{0.5}))-approximate second-order stationary point, respectively. In addition, we prove that Spider-SFO nearly matches the algorithmic lower bound for finding approximate first-order stationary points under the gradient Lipschitz assumption in the finite-sum setting. For stochastic zeroth-order method, we prove a cost of O(min(n^{1/2}eps^{-2}, eps^{-3})) which outperforms all existing results.