Laplacian PCA and Its Applications

Abstract

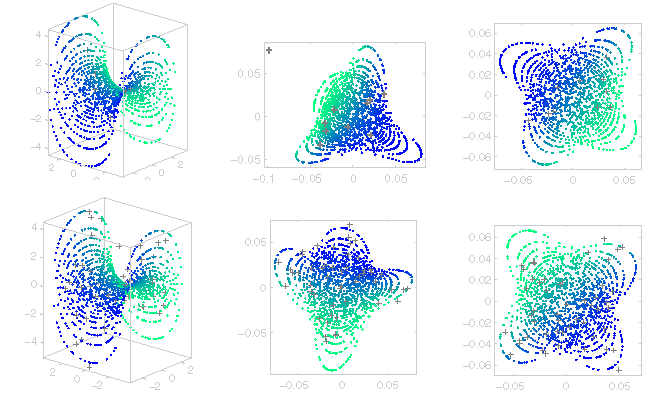

Dimensionality reduction plays a fundamental role in data processing, and principal component analysis (PCA) is widely used for it. In this paper, we develop the Laplacian PCA (LPCA) algorithm, which extends PCA to a more general form by locally optimizing the weighted scatter. While retaining the simplicity of PCA, LPCA brings two benefits: strong robustness against noise and weak metric-dependence on sample spaces. The LPCA algorithm is based on the global alignment of locally Gaussian or linear subspaces via an alignment technique borrowed from manifold learning. The weights are determined from the coding length of local samples so as to capture the local principal structure of the data. We also give an exemplary application of LPCA to manifold learning: manifold unfolding (non-linear dimensionality reduction) can be performed by aligning tangential maps, which are linear transformations of the tangent coordinates approximated by LPCA. The superiority of LPCA over PCA and kernel PCA is verified by experiments on face recognition (FRGC version 2 face database) and manifold unfolding (Scherk surface).
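
To make the idea of a locally weighted scatter concrete, the following is a minimal sketch of PCA on a single local patch under a weighted scatter matrix. It is an illustration under stated assumptions, not the LPCA algorithm itself: the function name `weighted_local_pca` is hypothetical, the weights are supplied by hand rather than derived from coding length, and the global alignment step is omitted.

```python
import numpy as np

def weighted_local_pca(patch, weights, d):
    """Top-d principal directions of one local patch under a weighted scatter.

    patch   : (k, D) array holding the k samples of a local neighbourhood
    weights : (k,) non-negative weights with a positive sum
              (hand-picked here; an assumption, not the coding-length rule)
    d       : number of principal directions to keep
    """
    patch = np.asarray(patch, dtype=float)
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()                                   # normalise weights to sum to 1
    mean = w @ patch                                  # weighted mean, shape (D,)
    centered = patch - mean
    scatter = (centered * w[:, None]).T @ centered    # weighted scatter, shape (D, D)
    eigvals, eigvecs = np.linalg.eigh(scatter)        # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:d]             # indices of the d largest
    basis = eigvecs[:, order]                         # (D, d) local principal directions
    coords = centered @ basis                         # (k, d) local coordinates
    return basis, coords, eigvals[order]

# Five samples on the first axis plus one distant outlier on the second axis.
patch = np.array([[-2, 0, 0], [-1, 0, 0], [0, 0, 0],
                  [1, 0, 0], [2, 0, 0], [0, 10, 0]], dtype=float)

# Weight 0 on the outlier: the leading direction follows the first axis (up to sign).
basis_w, _, _ = weighted_local_pca(patch, [1, 1, 1, 1, 1, 0], d=1)

# Uniform weights (ordinary PCA on the patch): the outlier dominates the scatter.
basis_u, _, _ = weighted_local_pca(patch, np.ones(6), d=1)
```

With uniform weights the function reduces to ordinary PCA on the patch, so the two calls differ only in how much the outlier contributes to the scatter.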