Linearized Alternating Direction Method with Parallel Splitting and Adaptive Penalty for Separable Convex Programs in Machine Learning

Abstract

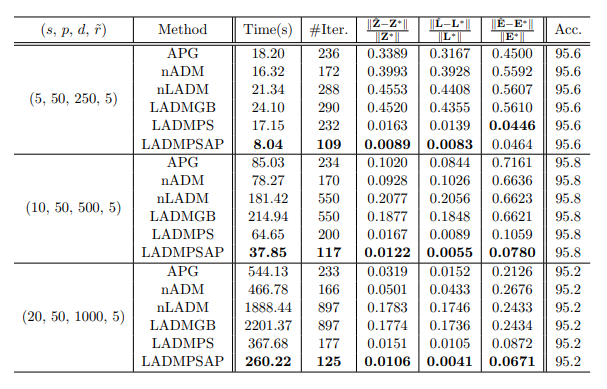

Many problems in statistics and machine learning (e.g., probabilistic graphical models, feature extraction, clustering, and classification) can be (re)formulated as linearly constrained separable convex programs. The traditional alternating direction method (ADM) and its linearized version (LADM) are designed for the two-variable case and cannot be naively generalized to the multi-variable case. In this paper, we propose LADM with parallel splitting and adaptive penalty (LADMPSAP) to solve multi-variable separable convex programs efficiently. When all the component objective functions have bounded subgradients, we obtain convergence results that are stronger than those of ADM and LADM: for example, the penalty parameter is allowed to be unbounded, and we prove sufficient and necessary conditions for global convergence. We further propose a simple optimality measure and establish the convergence rate of LADMPSAP in an ergodic sense. For programs with extra convex set constraints, we devise a practical version of LADMPSAP that converges faster. LADMPSAP is particularly suitable for sparse representation and low-rank recovery problems because its subproblems have closed-form solutions and the sparsity and low-rankness of the iterates can be preserved during the iteration. It is also highly parallelizable and hence well suited to parallel or distributed computing. Numerical experiments confirm the speed and accuracy advantages of LADMPSAP.
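To illustrate what closed-form subproblem solutions typically look like in sparse representation and low-rank recovery, the following is a minimal sketch of two standard proximal operators: elementwise soft thresholding for the l1 norm and singular value thresholding for the nuclear norm. The abstract does not name these operators, so their use here is an assumption, and the function names `soft_threshold` and `singular_value_threshold` are hypothetical. This is not the LADMPSAP algorithm itself, only the kind of per-variable update such methods commonly rely on.

```python
import numpy as np


def soft_threshold(x, tau):
    """Proximal operator of tau * ||x||_1: shrink each entry toward zero by tau.

    Entries with magnitude at most tau become zero, so the result is sparse.
    """
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)


def singular_value_threshold(X, tau):
    """Proximal operator of tau * ||X||_* (nuclear norm).

    Soft-thresholds the singular values, so small ones are removed and the
    result has rank no larger than that of X.
    """
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    s_shrunk = np.maximum(s - tau, 0.0)
    # Scale the columns of U by the shrunken singular values, then recombine.
    return (U * s_shrunk) @ Vt


if __name__ == "__main__":
    # Illustrative inputs only; not data from the paper.
    x = np.array([3.0, -0.5, 1.2, -2.0])
    print(soft_threshold(x, 1.0))

    X = np.diag([3.0, 1.0])
    print(singular_value_threshold(X, 2.0))
```

Because each operator acts on a single variable block, updates of this kind can in principle be evaluated independently for every block, which is consistent with the parallelizability noted in the abstract.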