Abstract

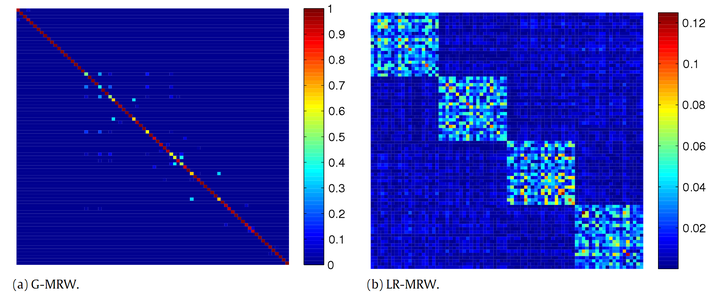

Markov Random Walks (MRW) have proven to be an effective way to understand spectral clustering and embedding. However, because they lack a global structural measure, conventional MRW (e.g., the Gaussian kernel MRW) cannot handle data points drawn from a mixture of subspaces. In this paper, we introduce a regularized MRW learning model for subspace clustering and estimation, which uses a low-rank penalty to constrain the global subspace structure. In our framework, both the local pairwise similarity and the global subspace structure can be learned from the transition probabilities of the MRW. We prove that, under suitable conditions, our proposed local/global criteria exactly capture the multiple-subspace structure and learn a low-dimensional embedding of the data that gives the true segmentation of the subspaces. To improve robustness in real situations, we also propose an extension of the MRW learning model that integrates transition matrix learning and error correction in a unified framework. Experimental results on both synthetic data and real applications demonstrate that the proposed MRW learning model and its robust extension outperform state-of-the-art subspace clustering methods.