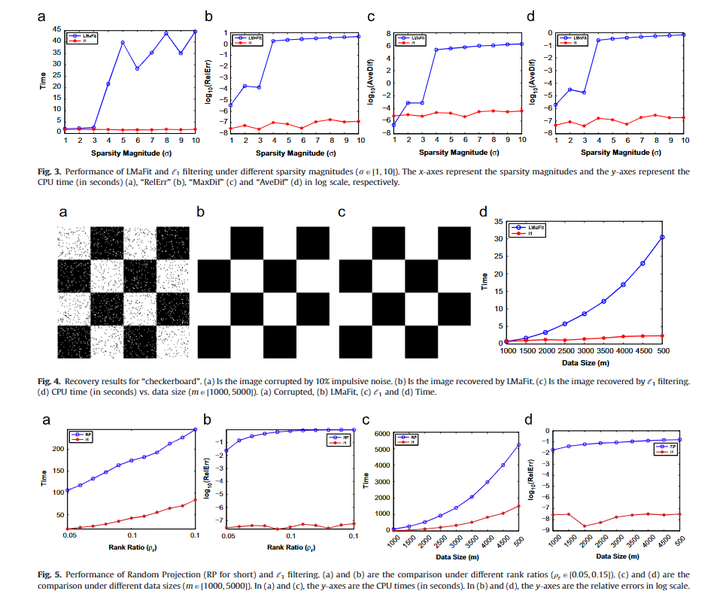

Abstract

Over the past decades, exactly recovering the intrinsic data structure from corrupted observations, known as Robust Principal Component Analysis (RPCA), has attracted tremendous interest and found many applications in computer vision and pattern recognition. Recently, this problem has been formulated as recovering a low-rank component and a sparse component from the observed data matrix. It has been proved that, under suitable conditions, this problem can be solved exactly by Principal Component Pursuit (PCP), i.e., by minimizing a combination of the nuclear norm and the ℓ1 norm. Most existing methods for solving PCP require Singular Value Decompositions (SVDs) of the data matrix, which results in high computational complexity and prevents the application of RPCA to very large scale computer vision problems. In this paper, we propose a novel algorithm, called ℓ1 filtering, for exactly solving PCP with O(r²(m+n)) complexity, where m×n is the size of the data matrix and r is the rank of the matrix to recover, which is assumed to be much smaller than m and n. Moreover, ℓ1 filtering is highly parallelizable. It is the first algorithm that can exactly solve a nuclear norm minimization problem in linear time (with respect to the data size). As a preliminary investigation, we also discuss potential extensions of PCP to more complex vision tasks that are motivated by ℓ1 filtering. Experiments on both synthetic data and real tasks demonstrate the great speed advantage of ℓ1 filtering over state-of-the-art algorithms and its wide applicability in the computer vision and pattern recognition communities.
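To make the PCP formulation concrete, the sketch below splits a data matrix D into a low-rank part A and a sparse part E by minimizing the nuclear norm of A plus λ times the ℓ1 norm of E subject to D = A + E. It is a minimal illustration of the conventional SVD-based approach (an inexact augmented Lagrangian scheme), not the ℓ1 filtering algorithm proposed here. The function names, the default weight λ = 1/√max(m, n), and the step-size schedule are assumptions chosen for illustration.

```python
import numpy as np


def soft_threshold(X, tau):
    """Entrywise shrinkage: proximal operator of tau * (l1 norm)."""
    return np.sign(X) * np.maximum(np.abs(X) - tau, 0.0)


def singular_value_threshold(X, tau):
    """Shrink singular values: proximal operator of tau * (nuclear norm)."""
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    return (U * soft_threshold(s, tau)) @ Vt


def pcp_baseline(D, lam=None, tol=1e-7, max_iter=500):
    """Hypothetical SVD-based PCP solver (inexact augmented Lagrangian).

    Returns (A, E) with A low-rank, E sparse, and A + E close to D.
    Each iteration costs one full SVD of an m x n matrix, which is the
    bottleneck the abstract refers to.
    """
    m, n = D.shape
    if lam is None:
        lam = 1.0 / np.sqrt(max(m, n))  # assumed default weight
    norm_D = np.linalg.norm(D, "fro")
    spectral = np.linalg.norm(D, 2)
    # Assumed initialization of the multiplier and penalty parameter.
    Y = D / max(spectral, np.abs(D).max() / lam)
    mu = 1.25 / spectral
    mu_max = mu * 1e7
    rho = 1.5
    A = np.zeros_like(D)
    E = np.zeros_like(D)
    for _ in range(max_iter):
        A = singular_value_threshold(D - E + Y / mu, 1.0 / mu)
        E = soft_threshold(D - A + Y / mu, lam / mu)
        residual = D - A - E
        Y = Y + mu * residual
        mu = min(mu * rho, mu_max)
        if np.linalg.norm(residual, "fro") <= tol * norm_D:
            break
    return A, E
```

Because every iteration takes a full SVD of the m×n matrix, the per-iteration cost of this baseline grows much faster than linearly in the matrix dimensions, which is the cost that motivates a linear-time alternative.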