Subspace Clustering under Complex Noise

Abstract

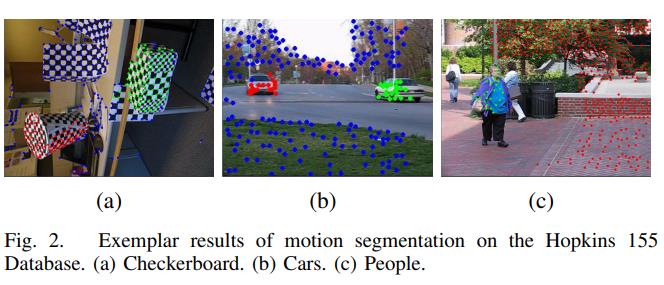

In this paper, we study the subspace clustering problem under complex noise. A wide class of reconstruction-based methods model subspace clustering by combining a quadratic data-fidelity term with a regularization term. From a statistical viewpoint, the quadratic data-fidelity term amounts to assuming that the data are contaminated by unimodal Gaussian noise, which is the setting adopted by most current subspace clustering models. However, real-world noise is often much more complex than this assumption allows. Moreover, such a coarse data-fidelity term may lower the clustering accuracy, the measure commonly used to evaluate these models. To address this issue, we propose mixture of Gaussian regression (MoG Regression) for subspace clustering. MoG Regression models the unknown noise distribution with a mixture of Gaussians so as to approximate the real distribution as closely as possible; the resulting affinity matrix better characterizes the structure of real-world data, which in turn improves clustering performance. Theoretically, the proposed model enjoys the grouping effect, which encourages the coefficients of highly correlated points to be nearly equal. Drawing upon the idea of minimum message length, we propose a model selection strategy that estimates the number of Gaussian components, offering an alternative to setting it empirically. In addition, we investigate the asymptotic property of our model. The proposed model is evaluated on challenging datasets. The experimental results show that the proposed MoG Regression model significantly outperforms several state-of-the-art subspace clustering methods.