Accelerated Alternating Direction Method of Multipliers: an Optimal O(1/K) Nonergodic Analysis

Abstract

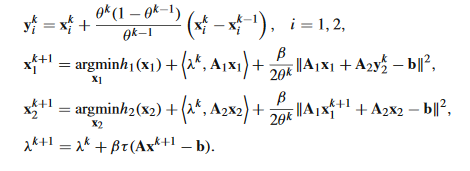

The Alternating Direction Method of Multipliers (ADMM) is widely used for linearly constrained convex problems. It is proven to have an $O(1/\sqrt{K})$ nonergodic convergence rate and a faster $O(1/K)$ ergodic rate after ergodic averaging, where $K$ is the number of iterations. Such a nonergodic convergence rate is not optimal. Moreover, ergodic averaging may destroy the sparseness and low-rankness in sparse and low-rank learning. In this paper, we modify the accelerated ADMM proposed in Ouyang et al. (SIAM J. Imaging Sci. 7(3):1588–1623, 2015) and give an $O(1/K)$ nonergodic convergence rate analysis, which satisfies $|F(x^K)-F(x^*)| \le O(1/K)$ and $\|Ax^K-b\| \le O(1/K)$, and $x^K$ has more favorable sparseness and low-rankness than its ergodic peer, where $F(x)$ is the objective function and $Ax=b$ is the linear constraint. As far as we know, this is the first $O(1/K)$ nonergodic convergent ADMM type method for general linearly constrained convex problems. Moreover, we show that the lower complexity bound of ADMM type methods for separable linearly constrained nonsmooth convex problems is $O(1/K)$, which means that our method is optimal.
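The remark that ergodic averaging may destroy sparseness can be illustrated with a minimal sketch. It is not the accelerated method analyzed in this paper: it runs standard (unaccelerated) scaled-form ADMM on a toy lasso problem, $\min_{x,z} \tfrac{1}{2}\|Dx-y\|^2 + \lambda\|z\|_1$ subject to $x - z = 0$, and compares the last iterate with the running average of the iterates. The problem instance, the names `D`, `y`, `lam`, `rho`, and the helper functions are all assumptions made for illustration.

```python
import numpy as np

def soft_threshold(v, t):
    # Proximal operator of t * ||.||_1 (elementwise shrinkage).
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def admm_lasso(D, y, lam=0.1, rho=1.0, iters=200):
    # Standard scaled-form ADMM for the lasso split x - z = 0.
    # Returns the last z iterate and the ergodic average of all z iterates.
    n = D.shape[1]
    x = np.zeros(n)
    z = np.zeros(n)
    u = np.zeros(n)
    M = D.T @ D + rho * np.eye(n)  # system matrix of the x-update, built once
    Dty = D.T @ y
    z_sum = np.zeros(n)
    for _ in range(iters):
        x = np.linalg.solve(M, Dty + rho * (z - u))  # quadratic x-update
        z = soft_threshold(x + u, lam / rho)         # l1 proximal z-update
        u = u + x - z                                # scaled dual update
        z_sum += z
    return z, z_sum / iters

# Hypothetical toy instance: 5 active coefficients out of 60.
rng = np.random.default_rng(0)
D = rng.standard_normal((30, 60))
x_true = np.zeros(60)
x_true[:5] = rng.standard_normal(5)
y = D @ x_true

z_last, z_avg = admm_lasso(D, y)
nnz_last = int(np.count_nonzero(z_last))
nnz_avg = int(np.count_nonzero(z_avg))
print("nonzeros in last iterate:   ", nnz_last)
print("nonzeros in ergodic average:", nnz_avg)
```

Each `z` iterate is produced by soft-thresholding and so contains exact zeros, whereas the average is nonzero at every coordinate that was active in any iterate. The averaged point is therefore at best as sparse as the last iterate and typically denser, which is the motivation for seeking a nonergodic rate.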