Joint Latent Space Learning and Regression for Cross Modal Retrieval

Abstract

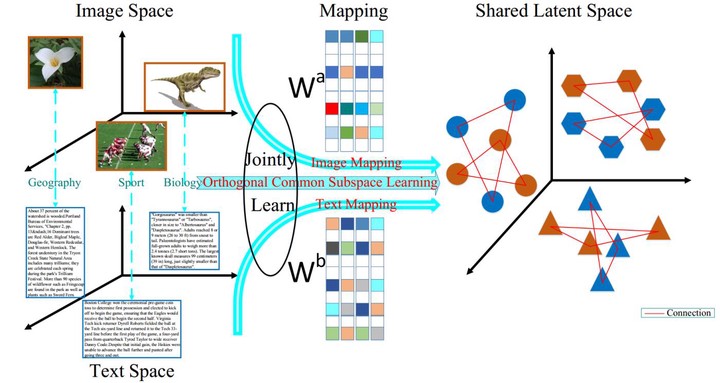

Cross-modal retrieval has received much attention in recent years. A common approach is to project multi-modality data into a common subspace and perform retrieval there. However, nearly all existing methods directly adopt the space defined by the binary class label information as the shared subspace for regression, without learning it. In this paper, we first adopt the spectral regression method to learn the optimal latent space shared by data of all modalities under orthogonal constraints. We then construct a graph model to project the multi-modality data into the latent space. Finally, we combine these two processes to jointly learn the latent space and the regression. We conduct extensive experiments on multiple benchmark datasets, and our proposed method outperforms the state-of-the-art approaches.