Accelerated First-Order Optimization Algorithms for Machine Learning

Abstract

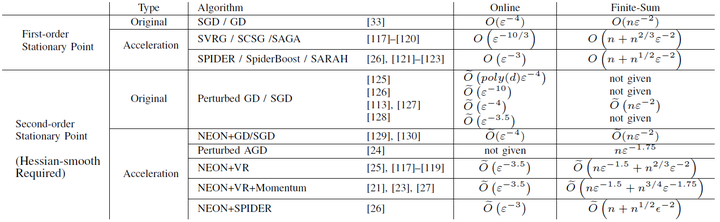

Numerical optimization serves as one of the pillars of machine learning. To meet the demands of big data applications, considerable effort has been devoted to designing algorithms that are fast both in theory and in practice. This paper provides a comprehensive survey of accelerated first-order algorithms, with a focus on stochastic algorithms. Specifically, the paper begins by reviewing the basic accelerated algorithms for deterministic convex optimization, then concentrates on their extensions to stochastic convex optimization, and finally introduces some recent developments in acceleration for nonconvex optimization.
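To make "acceleration" concrete, the following is a minimal sketch, not taken from the survey, of Nesterov's accelerated gradient method, a standard example of a basic accelerated algorithm for deterministic convex optimization, run side by side with plain gradient descent. The test function (a diagonal quadratic with curvatures 1, 0.1, 0.01 and 0.001), the step size 1/L, the momentum weight (k - 1)/(k + 2), and the helper names are all assumptions chosen for illustration.

```python
from typing import Callable, List

Vector = List[float]
GradFn = Callable[[Vector], Vector]


def gradient_descent(grad: GradFn, x0: Vector, L: float, iters: int) -> Vector:
    """Plain gradient descent with constant step size 1/L."""
    x = list(x0)
    for _ in range(iters):
        g = grad(x)
        x = [xi - gi / L for xi, gi in zip(x, g)]
    return x


def nesterov_agd(grad: GradFn, x0: Vector, L: float, iters: int) -> Vector:
    """Nesterov's accelerated gradient for an L-smooth convex function.

    x_k = y_{k-1} - grad(y_{k-1}) / L
    y_k = x_k + (k - 1) / (k + 2) * (x_k - x_{k-1})
    """
    x_prev = list(x0)
    y = list(x0)
    for k in range(1, iters + 1):
        g = grad(y)
        # Gradient step taken from the extrapolated point y, not from x_prev.
        x = [yi - gi / L for yi, gi in zip(y, g)]
        # Momentum weight starts at 0 and grows toward 1 as k increases.
        beta = (k - 1) / (k + 2)
        y = [xi + beta * (xi - xpi) for xi, xpi in zip(x, x_prev)]
        x_prev = x
    return x_prev


# Hypothetical test problem: f(x) = 0.5 * sum(a_i * x_i^2), minimized at x* = 0 with f* = 0.
A = [1.0, 0.1, 0.01, 0.001]


def f(x: Vector) -> float:
    return 0.5 * sum(a * xi * xi for a, xi in zip(A, x))


def grad_f(x: Vector) -> Vector:
    return [a * xi for a, xi in zip(A, x)]


L = max(A)  # smoothness constant = largest curvature
x0 = [1.0] * len(A)
k = 100
x_gd = gradient_descent(grad_f, x0, L, k)
x_agd = nesterov_agd(grad_f, x0, L, k)
print(f"GD : f(x_{k}) = {f(x_gd):.3e}")
print(f"AGD: f(x_{k}) = {f(x_agd):.3e}")
```

With curvatures this spread out, the flat coordinates dominate the error of gradient descent, while the momentum term lets the accelerated method make faster progress along them. The standard worst-case guarantees for an L-smooth convex function are f(x_k) - f* <= L||x_0 - x*||^2 / (2k) for gradient descent with step 1/L and f(x_k) - f* <= 2L||x_0 - x*||^2 / (k + 1)^2 for this accelerated scheme.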

Publication: Proceedings of the IEEE